Detiding

Get the lesson R script: detiding.R

Get the lesson data: download zip

Lesson Outline

Lesson Exercises

Goals and Motivation

This lesson will teach you how to use the SWMPr package to import SWMP data and how to use the WtRegDO package to detide a dissolved oxygen time series. We’ll also be using packages in the tidyverse to wrangle the data for use with the WtRegDO functions. Background information on SWMPr is here and background information on detiding is here.

By the end of this lesson, you should know how or be able to find resources to do the following:

- Load SWMPr and import data from Apalachicola and Sapelo Island using the

import_localfunction - Clean the SWMP data with

qaqcand combine datasets withcomb - Use the dplyr package from the tidyverse to prepare the data for WtRegDO

- Understand if dissolved oxygen is correlated with tide and how WtRegDO “removes” the tidal signal

- Use WtRegDO to detide the DO time series

Data import with SWMPr

Last time we got comfortable with R basics by exploring how to get around the RStudio console, understanding the basics of the R language, and importing datasets for analysis. Now that we have the basics out of the way, we can use some existing packages to work specifically with SWMP data.

The SWMPr package was developed a few years ago to provide a bridge between the raw data and the R analysis platform. It does this with some degree of success, but by far it’s most useful feature is being able to import and combine time series from SWMP sites with relative ease. In particular, the import_local, qaqc, and comb functions allow us to quickly import, clean up, and combine datasets for follow-up analysis. This is all we’ll do with SWMPr in these lessons.

The import_local function is designed to work with data downloaded from the CDMO using the Zip Downloads feature from the Advanced Query System. The files we have in our “data” folder were requested from this feature for all Apalachicola and Sapelo Island data from 2017 to 2019.

The import_local function has two arguments: path to indicate where the data are located and station_code to indicate which station to import. We first load SWMPr and then use the function to import data for Apalachicola Dry Bar station:

# load SWMPr

library(SWMPr)

# import data

apadbwq <- import_local(path = 'data', station_code = 'apadbwq')

# characteristics of the dataset

head(apadbwq)## datetimestamp temp f_temp spcond f_spcond sal f_sal do_pct f_do_pct

## 1 2017-01-01 00:00:00 15.9 <0> 34.99 <0> 22.1 <0> 104.1 <0>

## 2 2017-01-01 00:15:00 15.9 <0> 34.94 <0> 22.0 <0> 104.0 <0>

## 3 2017-01-01 00:30:00 15.9 <0> 34.90 <0> 22.0 <0> 103.5 <0>

## 4 2017-01-01 00:45:00 15.9 <0> 34.94 <0> 22.0 <0> 103.3 <0>

## 5 2017-01-01 01:00:00 15.9 <0> 34.99 <0> 22.1 <0> 104.1 <0>

## 6 2017-01-01 01:15:00 15.9 <0> 35.08 <0> 22.1 <0> 103.9 <0>

## do_mgl f_do_mgl depth f_depth cdepth f_cdepth level f_level clevel f_clevel

## 1 9.0 <0> 1.88 <0> 1.81 <3> NA <-1> NA <NA>

## 2 9.0 <0> 1.88 <0> 1.81 <3> NA <-1> NA <NA>

## 3 9.0 <0> 1.89 <0> 1.82 <3> NA <-1> NA <NA>

## 4 8.9 <0> 1.89 <0> 1.83 <3> NA <-1> NA <NA>

## 5 9.0 <0> 1.90 <0> 1.84 <3> NA <-1> NA <NA>

## 6 9.0 <0> 1.90 <0> 1.84 <3> NA <-1> NA <NA>

## ph f_ph turb f_turb chlfluor f_chlfluor

## 1 8.3 <0> 15 <0> NA <-1>

## 2 8.2 <0> 15 <0> NA <-1>

## 3 8.3 <0> 13 <0> NA <-1>

## 4 8.3 <0> 16 <0> NA <-1>

## 5 8.3 <0> 10 <0> NA <-1>

## 6 8.3 <0> 14 <0> NA <-1>dim(apadbwq)## [1] 105120 25range(apadbwq$datetimestamp)## [1] "2017-01-01 00:00:00 EST" "2019-12-31 23:45:00 EST"Note that this function was able to import and combine data from multiple csv files. We would have had to do this by hand if we were importing data with more general import functions available in R (e.g., read_csv from last time).

Cleaning and combining SWMP data

Each row has data for multiple parameters at 15 minute intervals. Each parameter also includes a column with QAQC flags, i.e., f_ then the parameter name. We can use the qaqc function to “screen” observations with specific QAQC flags. You can view what the flags mean at this website: http://cdmo.baruch.sc.edu/data/qaqc.cfm. We’ll keep all data that have the flags 0, 1, 2, 3, 4, and 5 by indicating this information in the qaqc_keep argument (in practice, you may only want to keep data with a “zero” flag). You can view a tabular summary of the flags in a dataset using the qaqcchk function.

# keep only observations that passed qaqc chekcs

apadbwq <- qaqc(apadbwq, qaqc_keep = c('0', '1', '2', '3', '4', '5'))

# check the results

head(apadbwq)## datetimestamp temp spcond sal do_pct do_mgl depth cdepth level clevel

## 1 2017-01-01 00:00:00 15.9 34.99 22.1 104.1 9.0 1.88 1.81 NA NA

## 2 2017-01-01 00:15:00 15.9 34.94 22.0 104.0 9.0 1.88 1.81 NA NA

## 3 2017-01-01 00:30:00 15.9 34.90 22.0 103.5 9.0 1.89 1.82 NA NA

## 4 2017-01-01 00:45:00 15.9 34.94 22.0 103.3 8.9 1.89 1.83 NA NA

## 5 2017-01-01 01:00:00 15.9 34.99 22.1 104.1 9.0 1.90 1.84 NA NA

## 6 2017-01-01 01:15:00 15.9 35.08 22.1 103.9 9.0 1.90 1.84 NA NA

## ph turb chlfluor

## 1 8.3 15 NA

## 2 8.2 15 NA

## 3 8.3 13 NA

## 4 8.3 16 NA

## 5 8.3 10 NA

## 6 8.3 14 NAdim(apadbwq)## [1] 105120 13range(apadbwq$datetimestamp)## [1] "2017-01-01 00:00:00 EST" "2019-12-31 23:45:00 EST"Notice that the number of rows are the same as before - no rows are removed by qaqc. Values that did not fit the screening criteria are given a NA value. Also notice the flag columns are removed.

Our metabolism functions also require weather data. We can repeat the steps above to import and clean data from the weather station at Apalachicola.

# import weather data, clean it up

apaebmet <- import_local(path = 'data', station_code = 'apaebmet')

apaebmet <- qaqc(apaebmet, qaqc_keep = c('0', '1', '2', '3', '4', '5'))

# check the results

head(apaebmet)## datetimestamp atemp rh bp wspd maxwspd wdir sdwdir totpar totprcp

## 1 2017-01-01 00:00:00 15.7 89 1020 4.8 6.7 97 10 0 0

## 2 2017-01-01 00:15:00 15.8 90 1020 4.9 6.5 103 9 0 0

## 3 2017-01-01 00:30:00 16.1 91 1020 3.8 5.8 107 11 0 0

## 4 2017-01-01 00:45:00 16.5 92 1019 3.3 6.4 119 15 0 0

## 5 2017-01-01 01:00:00 16.9 93 1019 5.3 8.6 113 9 0 0

## 6 2017-01-01 01:15:00 17.3 93 1019 3.9 5.6 118 10 0 0

## totsorad

## 1 NA

## 2 NA

## 3 NA

## 4 NA

## 5 NA

## 6 NAdim(apaebmet)## [1] 105120 11range(apaebmet$datetimestamp)## [1] "2017-01-01 00:00:00 EST" "2019-12-31 23:45:00 EST"The comb function in SWMPr lets us combine data from two locations using the datetimestamp column. We’ll need to do this to use the functions in the WtRegDO package that require both water quality and weather data.

There are a couple of arguments to consider when using the comb function. First, the timestep argument defines the time step for the resulting output. Keep this at 15 to retain all of the data. You could use a larger time step to subset the data if, for example, we wanted data every 60 minutes. Second, the method argument defines how two datasets with different date ranges are combined. Use method = 'union' to retain the entire date range across both datasets or use method = 'intersect' to retain only the dates that include data from both datasets. For our example, union and intersect produce the same results since the date ranges are the same.

To speed up the examples in our lesson, we’ll use a 60 minute timestep. In practice, it’s better to retain all of the data (i.e., timestep = 15).

# combine water quality and weather data

apa <- comb(apadbwq, apaebmet, timestep = 60, method = 'union')

# check the results

head(apa)## datetimestamp temp spcond sal do_pct do_mgl depth cdepth level clevel

## 1 2017-01-01 00:00:00 15.9 34.99 22.1 104.1 9.0 1.88 1.81 NA NA

## 2 2017-01-01 01:00:00 15.9 34.99 22.1 104.1 9.0 1.90 1.84 NA NA

## 3 2017-01-01 02:00:00 15.9 35.22 22.2 103.5 9.0 1.94 1.89 NA NA

## 4 2017-01-01 03:00:00 16.0 36.95 23.4 100.1 8.6 2.00 1.95 NA NA

## 5 2017-01-01 04:00:00 16.0 37.10 23.5 99.7 8.5 2.01 1.96 NA NA

## 6 2017-01-01 05:00:00 16.1 37.19 23.6 98.8 8.4 2.01 1.96 NA NA

## ph turb chlfluor atemp rh bp wspd maxwspd wdir sdwdir totpar totprcp

## 1 8.3 15 NA 15.7 89 1020 4.8 6.7 97 10 0 0

## 2 8.3 10 NA 16.9 93 1019 5.3 8.6 113 9 0 0

## 3 8.3 13 NA 18.3 94 1018 3.9 5.2 121 7 0 0

## 4 8.2 22 NA 19.0 92 1018 4.6 6.3 151 8 0 0

## 5 8.2 28 NA 19.5 90 1018 5.0 6.8 153 9 0 0

## 6 8.2 11 NA 19.4 90 1018 4.2 6.3 151 9 0 0

## totsorad

## 1 NA

## 2 NA

## 3 NA

## 4 NA

## 5 NA

## 6 NAdim(apa)## [1] 26281 23range(apa$datetimestamp)## [1] "2017-01-01 EST" "2020-01-01 EST"Exercise 1

Repeat the above examples but do this using data for Sapelo Island. Import data for sapdcwq and sapmlmet, clean them up with qaqc, and combine them with comb.

- Open a new script in your RStudio project, save it with an informative title (e.g,

import-detiding.R), and add a section header withCtrl + Shift + R. - Load the SWMPr package with the

libraryfunction. This should already be installed from last time (i.e.,install.packages('SWMPr')). - Import and clean up

sapdcwqwithimport_localandqaqc. - Import and clean up

sapmlmetwithimport_localandqaqc. - Combine the two with

comb. Use a 60 minute time step and use theunionoption.

Preparing SWMP data for detiding

Now we want to setup our data for use with functions in the WtRegDO package. As with most R functions, the input formats are very specific requiring us to make sure the column names, locations, and types of columns in our data are exactly as needed. The two functions in WtRegDO that we’ll use in this lesson are evalcor and wtreg. You can look at the examples in the help file for these functions to see what format we need (?evalcor, ?wtreg).

The WtRegDO package comes with an example dataset that has a format we can follow for our SWMP data. First we load WtRegDO and then examine the SAPDC example dataset. This is the same site we used for the previous exercise, just with older data.

# load WtRegDO

library(WtRegDO)

# view first six rows of SAPDC

head(SAPDC)## DateTimeStamp Temp Sal DO_obs ATemp BP WSpd Tide

## 1 2012-01-01 00:00:00 14.9 33.3 5.0 11.9 1022 0.5 0.8914295

## 2 2012-01-01 00:30:00 14.9 33.4 5.5 11.3 1022 0.6 1.0011830

## 3 2012-01-01 01:00:00 14.9 33.4 5.9 9.9 1021 0.6 1.0728098

## 4 2012-01-01 01:30:00 14.8 33.3 6.4 10.0 1022 2.4 1.1110885

## 5 2012-01-01 02:00:00 14.7 33.2 6.6 11.4 1022 1.3 1.1251628

## 6 2012-01-01 02:30:00 14.7 33.3 6.1 10.7 1021 0.0 1.1223799# View the structure of SAPDC

str(SAPDC)## 'data.frame': 17568 obs. of 8 variables:

## $ DateTimeStamp: POSIXct, format: "2012-01-01 00:00:00" "2012-01-01 00:30:00" ...

## $ Temp : num 14.9 14.9 14.9 14.8 14.7 14.7 14.6 14.6 14.4 14.2 ...

## $ Sal : num 33.3 33.4 33.4 33.3 33.2 33.3 33.4 33.4 33.3 33.3 ...

## $ DO_obs : num 5 5.5 5.9 6.4 6.6 6.1 5.7 5.2 4.6 4 ...

## $ ATemp : num 11.9 11.3 9.9 10 11.4 10.7 10.2 8.9 10.4 11 ...

## $ BP : num 1022 1022 1021 1022 1022 ...

## $ WSpd : num 0.5 0.6 0.6 2.4 1.3 0 0 0 0 0 ...

## $ Tide : num 0.891 1.001 1.073 1.111 1.125 ...We can compare this to our Apalachicola dataset from before.

# view first six rows of apa

head(apa)## datetimestamp temp spcond sal do_pct do_mgl depth cdepth level clevel

## 1 2017-01-01 00:00:00 15.9 34.99 22.1 104.1 9.0 1.88 1.81 NA NA

## 2 2017-01-01 01:00:00 15.9 34.99 22.1 104.1 9.0 1.90 1.84 NA NA

## 3 2017-01-01 02:00:00 15.9 35.22 22.2 103.5 9.0 1.94 1.89 NA NA

## 4 2017-01-01 03:00:00 16.0 36.95 23.4 100.1 8.6 2.00 1.95 NA NA

## 5 2017-01-01 04:00:00 16.0 37.10 23.5 99.7 8.5 2.01 1.96 NA NA

## 6 2017-01-01 05:00:00 16.1 37.19 23.6 98.8 8.4 2.01 1.96 NA NA

## ph turb chlfluor atemp rh bp wspd maxwspd wdir sdwdir totpar totprcp

## 1 8.3 15 NA 15.7 89 1020 4.8 6.7 97 10 0 0

## 2 8.3 10 NA 16.9 93 1019 5.3 8.6 113 9 0 0

## 3 8.3 13 NA 18.3 94 1018 3.9 5.2 121 7 0 0

## 4 8.2 22 NA 19.0 92 1018 4.6 6.3 151 8 0 0

## 5 8.2 28 NA 19.5 90 1018 5.0 6.8 153 9 0 0

## 6 8.2 11 NA 19.4 90 1018 4.2 6.3 151 9 0 0

## totsorad

## 1 NA

## 2 NA

## 3 NA

## 4 NA

## 5 NA

## 6 NA# view the structure of apa

str(apa)## Classes 'swmpr' and 'data.frame': 26281 obs. of 23 variables:

## $ datetimestamp: POSIXct, format: "2017-01-01 00:00:00" "2017-01-01 01:00:00" ...

## $ temp : num 15.9 15.9 15.9 16 16 16.1 16.2 16.2 16.1 16.3 ...

## $ spcond : num 35 35 35.2 37 37.1 ...

## $ sal : num 22.1 22.1 22.2 23.4 23.5 23.6 23.7 23.2 22.4 23.3 ...

## $ do_pct : num 104.1 104.1 103.5 100.1 99.7 ...

## $ do_mgl : num 9 9 9 8.6 8.5 8.4 8.1 8.1 8.2 7.9 ...

## $ depth : num 1.88 1.9 1.94 2 2.01 2.01 1.95 1.89 1.81 1.74 ...

## $ cdepth : num 1.81 1.84 1.89 1.95 1.96 1.96 1.9 1.84 1.75 1.68 ...

## $ level : num NA NA NA NA NA NA NA NA NA NA ...

## $ clevel : num NA NA NA NA NA NA NA NA NA NA ...

## $ ph : num 8.3 8.3 8.3 8.2 8.2 8.2 8.1 8.1 8.1 8.1 ...

## $ turb : num 15 10 13 22 28 11 23 26 26 27 ...

## $ chlfluor : num NA NA NA NA NA NA NA NA NA NA ...

## $ atemp : num 15.7 16.9 18.3 19 19.5 19.4 19.3 19.7 19.7 20.3 ...

## $ rh : num 89 93 94 92 90 90 91 89 90 90 ...

## $ bp : num 1020 1019 1018 1018 1018 ...

## $ wspd : num 4.8 5.3 3.9 4.6 5 4.2 4.3 5.3 4.9 5.6 ...

## $ maxwspd : num 6.7 8.6 5.2 6.3 6.8 6.3 5.6 7.5 7.2 7.5 ...

## $ wdir : num 97 113 121 151 153 151 147 153 155 156 ...

## $ sdwdir : num 10 9 7 8 9 9 8 9 10 9 ...

## $ totpar : num 0 0 0 0 0 ...

## $ totprcp : num 0 0 0 0 0 0 0 0 0 0 ...

## $ totsorad : num NA NA NA NA NA NA NA NA NA NA ...

## - attr(*, "station")= chr [1:2] "apadbwq" "apaebmet"

## - attr(*, "parameters")= chr [1:22] "temp" "spcond" "sal" "do_pct" ...

## - attr(*, "qaqc_cols")= logi FALSE

## - attr(*, "cens_cols")= logi FALSE

## - attr(*, "date_rng")= POSIXct[1:2], format: "2017-01-01" "2020-01-01"

## - attr(*, "timezone")= chr "America/Jamaica"

## - attr(*, "stamp_class")= chr [1:2] "POSIXct" "POSIXt"So, we need to do a few things to our Apalachicola dataset to make it look like SAPDC so it will work with the functions. We can use the dplyr package to “wrangle” the data into the correct format (here’s a useful cheatsheet for this package). The dplyr package comes with the tidyverse.

All we need to do is rename the columns and select the ones we want in the correct order. This can all be done with the select function in dplyr. Remember, we are trying to follow the exact format of the SAPDC dataset that comes with WtRegDO.

# load dplyr

library(dplyr)

# select and rename columns

apa <- select(apa,

DateTimeStamp = datetimestamp,

Temp = temp,

Sal = sal,

DO_obs = do_mgl,

ATemp = atemp,

BP = bp,

WSpd = wspd,

Tide = depth

)

# show first six rows

head(apa)## DateTimeStamp Temp Sal DO_obs ATemp BP WSpd Tide

## 1 2017-01-01 00:00:00 15.9 22.1 9.0 15.7 1020 4.8 1.88

## 2 2017-01-01 01:00:00 15.9 22.1 9.0 16.9 1019 5.3 1.90

## 3 2017-01-01 02:00:00 15.9 22.2 9.0 18.3 1018 3.9 1.94

## 4 2017-01-01 03:00:00 16.0 23.4 8.6 19.0 1018 4.6 2.00

## 5 2017-01-01 04:00:00 16.0 23.5 8.5 19.5 1018 5.0 2.01

## 6 2017-01-01 05:00:00 16.1 23.6 8.4 19.4 1018 4.2 2.01# view structure

str(apa)## Classes 'swmpr' and 'data.frame': 26281 obs. of 8 variables:

## $ DateTimeStamp: POSIXct, format: "2017-01-01 00:00:00" "2017-01-01 01:00:00" ...

## $ Temp : num 15.9 15.9 15.9 16 16 16.1 16.2 16.2 16.1 16.3 ...

## $ Sal : num 22.1 22.1 22.2 23.4 23.5 23.6 23.7 23.2 22.4 23.3 ...

## $ DO_obs : num 9 9 9 8.6 8.5 8.4 8.1 8.1 8.2 7.9 ...

## $ ATemp : num 15.7 16.9 18.3 19 19.5 19.4 19.3 19.7 19.7 20.3 ...

## $ BP : num 1020 1019 1018 1018 1018 ...

## $ WSpd : num 4.8 5.3 3.9 4.6 5 4.2 4.3 5.3 4.9 5.6 ...

## $ Tide : num 1.88 1.9 1.94 2 2.01 2.01 1.95 1.89 1.81 1.74 ...We can also verify the column names are the same between the two datasets. Note the use of two equal signs - this is how we tell R to test for equality.

names(apa) == names(SAPDC)## [1] TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUEFinally, we need to deal with pesky missing data values. Most functions in R deal with missing data by either not working at all or providing some work-around to accommodate the missing values. The functions in WtRegDO are in the former category.

Missing data in SWMP are not uncommon and they often occur when sondes or other equipment are down for maintenance or are otherwise broken for a period of time. So, missing observations usually come in blocks where all parameters are unavailable, as opposed to only one parameter. We can quickly remove rows with missing data using the na.omit function. Note that this will remove an entire row where even one parameter is missing data. Usually this is not ideal for most data, but this is okay for our examples because we require all parameters at a time step to estimate metabolism.

Here we see an example of a chunk of missing observations.

apa[which(is.na(apa$Tide))[1:5], ]## DateTimeStamp Temp Sal DO_obs ATemp BP WSpd Tide

## 1594 2017-03-08 09:00:00 NA NA NA 19.8 1026 1.9 NA

## 7455 2017-11-07 14:00:00 NA NA NA 26.1 1018 4.3 NA

## 13499 2018-07-17 10:00:00 NA NA NA 29.7 1018 2.3 NA

## 14823 2018-09-10 14:00:00 NA NA NA 28.0 1014 2.0 NA

## 14824 2018-09-10 15:00:00 NA NA NA 29.5 1014 1.5 NANow we just remove the rows with missing data using na.omit.

# number of rows before

nrow(apa)## [1] 26281# omit missing rows

apa <- na.omit(apa)

# number of rows after

nrow(apa)## [1] 13988Exercise 2

Repeat the above examples but use the combined dataset for Sapelo Island that you created in Exercise 1.

- Create a new section in your script using

Ctrl + Shift + rand give it an appropriate name. - Load the dplyr package with the library function.

- Simultaneously rename and select the date/time columns (

DateTimeStamp = datetimestamp), water temperature (Temp = temp), salinity (Sal = sal), dissolved oxygen (DO_obs = do_mgl), air temperature (ATemp = atemp), barometric pressure (BP = bp), wind speed (WSpd = wspd), and tidal height (Tide = depth) columns in the Sapelo Island dataset with theselectfunction from dplyr. Don’t forget to assign the new dataset to an object in your workspace (with<-). - Remove missing rows with

na.omit. How many rows were removed (e.g.,nrowbefore and after)?

What is and why detiding

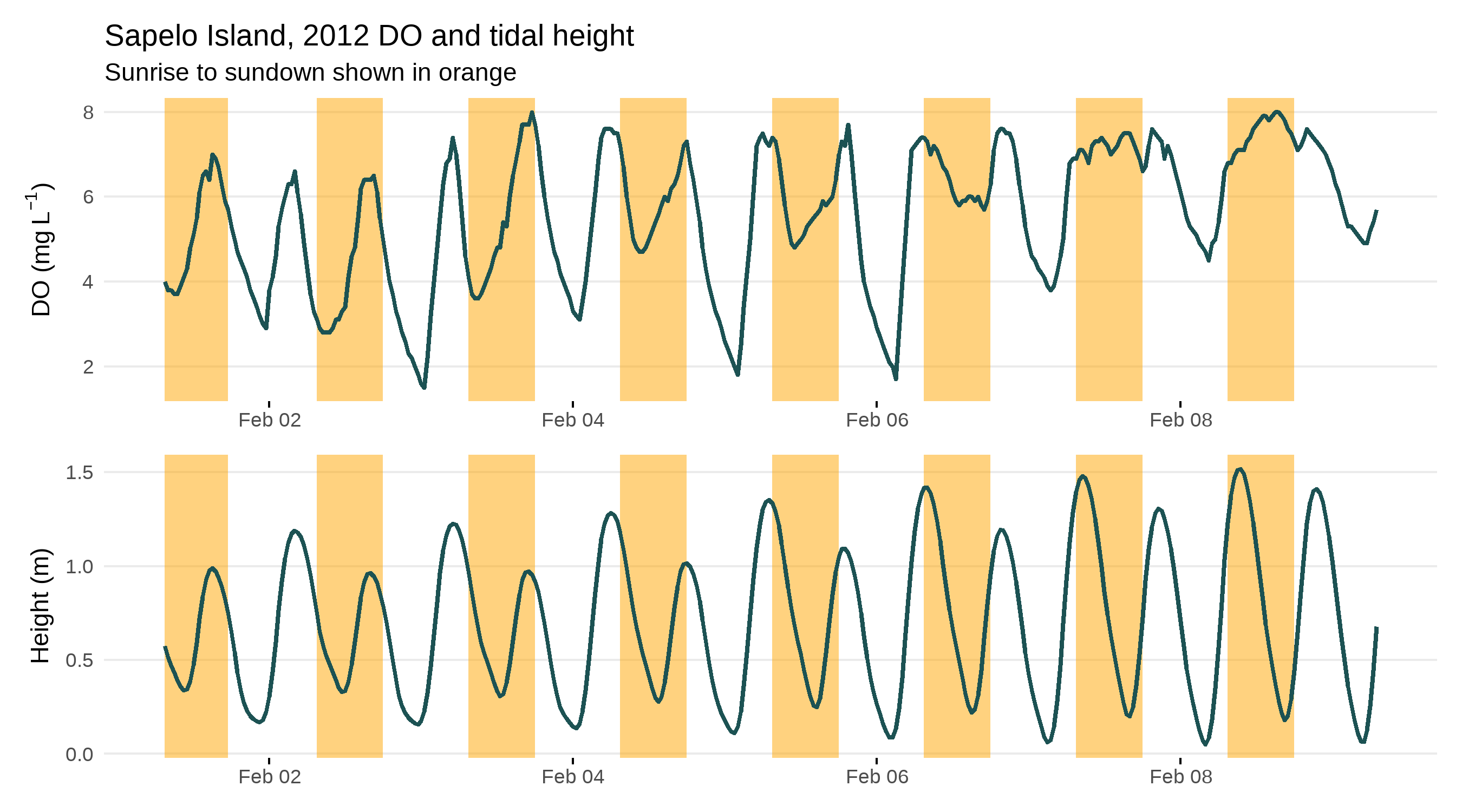

Now we can start using the detiding functions in WtRegDO. The motivation is that the dissolved oxygen time series is correlated with the tide and we want to remove that signal prior to estimating metabolism. A fundamental assumption of the methods we use to estimate metabolism is that the data represents a sample from the same place over time. If a monitoring sonde is measuring dissolved oxygen moving past the sensor with changes in the tide, then we are potentially evaluating parcels of water with different metabolic histories.

Quite literally, in order for the metabolism functions to work well, the DO time series should have a noticeable diel signal. DO should start increasing with sunrise as photosynthesis/production begins, it should peak around mid-day when temperature and solar radiation is highest, and it should decrease with sundown and into the night as respiration processes dominate. A DO time series influenced by the tide will typically not follow this pattern. Metabolism estimates from DO data with significant tidal influence will often appear as “anomalous”, having negative values for production and positive values for respiration. We’ll discuss more about this next time.

Here’s an example from Sapelo Island demonstrating the problem.

Detiding works by using a regression approach to estimate a “signal” of the tide in the DO time series and then removing that signal to produce a “normalized” DO time series. This normalized time series is thought to represent a biological signal as opposed to both a biological and tidal signal in the observed DO time series. The method is based off of work in Hirsch et al. 2010 using a moving window, weighted regression approach that fits a unique model to each point in the time series. Each unique model is fit using a set of weights that determine the influence of observations in the window to the model fit at the center of the window. For detiding, these weights are determined by the time of day, number of days in the window, and a range of observations for the tidal height. The “widths” of these windows have a direct effect on how well the model can detide the DO data.

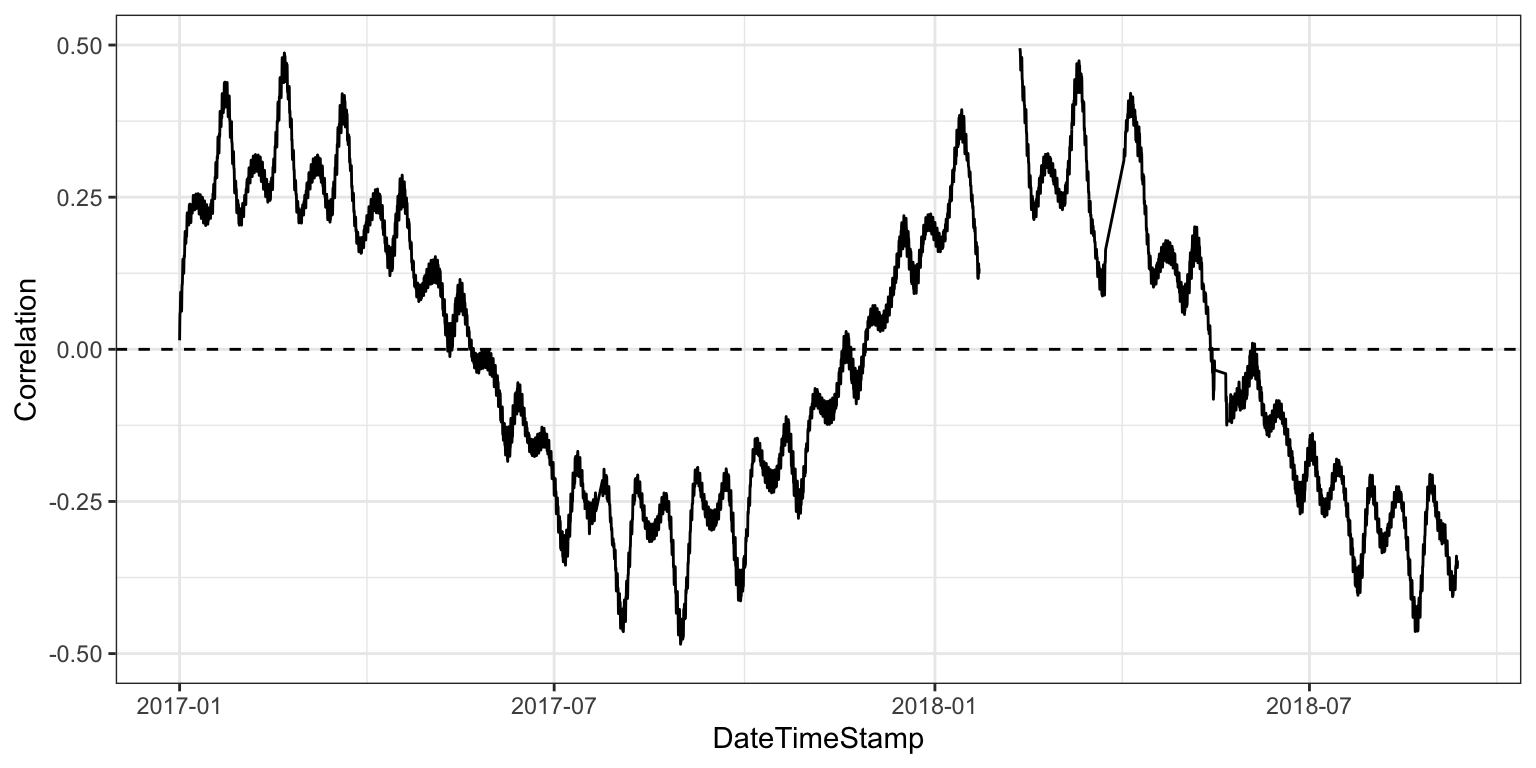

An additional consideration for detiding is that it does not work well during all times of the year. The model is likely to remove a portion of the biological signal at times of the year when the tidal height is correlated with the sun angle. In other words, when periods of peak productivity are correlated with tidal height, it’s impossible to isolate and remove only the tidal signal. The end result is a normalized DO time series that is likely to underestimate metabolism because a portion of the biological signal was also removed from the observed DO during detiding.

Finally, detiding may not even be necessary for a DO time series. If you have a suspicion that your DO data are tidally influenced, it’s best to verify this before blank application of the detiding functions. A simple plot of dissolved oxygen with the tide over time can be used to evaluate a potential correlation. It can be better to estimate metabolism without detiding then to detide and estimate metabolism if there is no or a minimal tidal influence.

For all of these reasons, detiding is an art that may require several iterations of model fitting to get the most desirable result. Evaluating model fit is best achieved through evaluation of the metabolism estimates and we save that discussion for the next lesson.

Using WtRegDO to detide

If you have strong reason to believe your data are tidally influenced, first use the evalcor function to “evaluate the correlation” between tidal change and the sun angle. These correlations will help you identify periods of time when you might expect the detiding functions to not work well by removing both the tidal and biological signal.

The evalcor function requires an input dataset (as prepared from before), the time zone at the location, and the location lat/lon. The time zone can be extracted from the DateTimeStamp column of the dataset. The latitude and longitude can be found from the metadata in the zipped folder that was downloaded from CDMO or using the site_codes() function from SWMPr. If using the latter approach, you’ll need to register your computer’s IP address with CDMO.

Site location info is needed so that the evalcor function can identify the angle of the sun at the location and time for each observation. The function may take a few minutes to run.

# get time zone

tz <- attr(apa$DateTimeStamp, which = 'tzone')

lat <- 29.6747

long <- -85.0583

evalcor(apa, tz = tz, lat = lat, long = long)

What we get is a time series of the correlation of tidal height with the sun angle. We can expect the detiding method to work best when this correlation is at or close to zero. So, in this case, detiding may not work as well in middle to late summer and early spring. For periods of the year when detiding may not work, interpret the results with caution, or simply do not use detiding during those parts of the year. There is no specific rule defining a maximum acceptable correlation for detiding, but Beck et al. 2015 suggest time periods when correlations exceed +/- 0.2 may be problematic.

Now that we have an expectation for when detiding could work well, we can use the wtreg function to detide the DO time series. The same inputs for evalcor are used for wtreg. The rest of the arguments for wtreg are left at their default value, including the window widths for weighted regression. Running the function may also take a minute or two.

dtd <- wtreg(apa, tz = tz, lat = lat, long = long)The output from wtreg is similar to the input dataset, but with some additional columns added. The two most important ones are DO_prd and DO_nrm for the predicted DO time series from your observed data and DO_nrm for the “normalized” or detided time series.

head(dtd)## DateTimeStamp Temp Sal DO_obs ATemp BP WSpd Tide metab_date

## 1 2017-01-01 00:00:00 15.9 22.1 9.0 15.7 1020 4.8 1.88 2016-12-31

## 2 2017-01-01 01:00:00 15.9 22.1 9.0 16.9 1019 5.3 1.90 2016-12-31

## 3 2017-01-01 02:00:00 15.9 22.2 9.0 18.3 1018 3.9 1.94 2016-12-31

## 4 2017-01-01 03:00:00 16.0 23.4 8.6 19.0 1018 4.6 2.00 2016-12-31

## 5 2017-01-01 04:00:00 16.0 23.5 8.5 19.5 1018 5.0 2.01 2016-12-31

## 6 2017-01-01 05:00:00 16.1 23.6 8.4 19.4 1018 4.2 2.01 2016-12-31

## solar_period solar_time day_hrs dec_time hour Beta2

## 1 sunset 2016-12-31 22:51:56 10.27807 -365.0000 0 -0.661774387

## 2 sunset 2016-12-31 22:51:56 10.27807 -364.9583 1 -0.197013200

## 3 sunset 2016-12-31 22:51:56 10.27807 -364.9167 2 0.035692964

## 4 sunset 2016-12-31 22:51:56 10.27807 -364.8750 3 -0.002937492

## 5 sunset 2016-12-31 22:51:56 10.27807 -364.8333 4 -0.187566704

## 6 sunset 2016-12-31 22:51:56 10.27807 -364.7917 5 -0.406850558

## DO_prd DO_nrm

## 1 8.821057 8.954392

## 2 8.714655 8.760646

## 3 8.657867 8.653554

## 4 8.625646 8.636010

## 5 8.583420 8.656157

## 6 8.534384 8.678371In the next lesson, we’ll cover some ways to evaluate effectiveness of the detiding. For now, we can look at a simple plot to get a sense of how detiding altered the data. This example uses the plotly library, you’ll have to install it to recreate the plot.

library(plotly)

plot_ly(dtd, x = ~DateTimeStamp, y = ~DO_obs, name = 'Observed DO', type = 'scatter', mode = 'lines') %>%

add_trace(y = ~DO_nrm, name = 'Detided DO', mode = 'lines') %>%

layout(xaxis = list(title = NULL), yaxis = list(title = "DO (mg/L)"))There are a few things to note from the above graph. First, the “normalized” detided DO time series has a much more noticeable diel signal. This can often lead to less “anomalous” metabolism estimates. Second,the detided time series has a diel amplitude that is much less than the observed time series. This will usually be the case for detided data and the choice of window widths is important to provide a balance between removal of the tidal influence relative to the biological signal. We’ll discuss this balance next time.

Exercise 3

Try running the evalcor and wtreg functions on the Sapelo Island data from Exercise 2.

- Run

evalcorusingtz = 'America/Jamaica',lat = 31.39, andlong = -81.28. - From the plot, what times of the year might you expect detiding to not work very well?

- Run

wtregusing the same arguments in step 1. If you have plotly installed, try plotting the results to see how the “normalized” time series compares to the observed.

Summary

In this lesson we learned how to import data with SWMPr, prepare the data for analysis with WtRegDO, evaluate the DO time series with evalcor, and run weighted regression with wtreg to detide the data. Next time we’ll cover the basics of estimating ecosystem metabolism and how we can use those results to evaluate the detiding results.